An Order-of-Magnitude Leap for Accelerated Computing

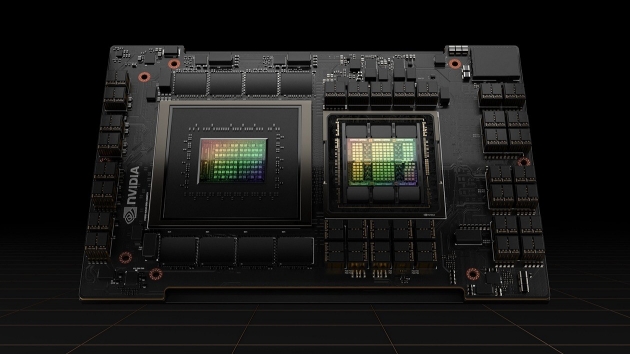

The NVIDIA H100 GPU delivers exceptional performance, scalability, and security for every workload. H100 uses breakthrough innovations based on the NVIDIA Hopper™ architecture to deliver industry-leading conversational AI, speeding up large language model (LLM) inference by up to 30X over the A100. H100 also includes a dedicated Transformer Engine to accelerate trillion-parameter language models.

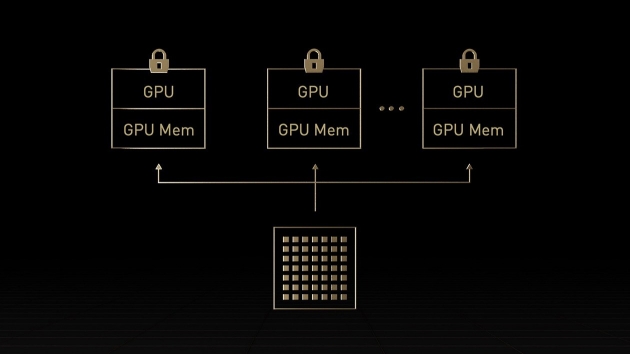

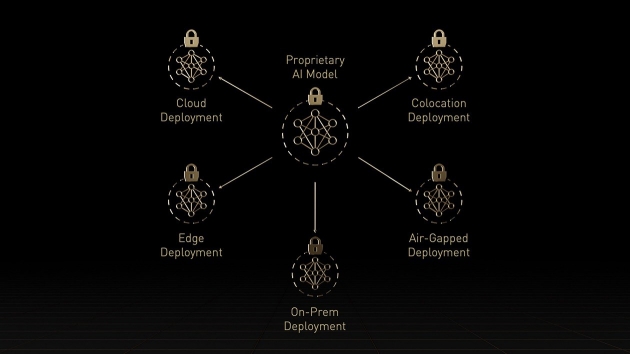

Securely Accelerate Workloads From Enterprise to Exascale

Up to 4X Higher AI Training on GPT-3

Projected performance subject to change. GPT-3 175B training: A100 cluster: HDR IB network, H100 cluster: NDR IB network | Mixture of Experts (MoE) training: Transformer Switch-XXL variant with 395B parameters on a 1T-token dataset, A100 cluster: HDR IB network, H100 cluster: NDR IB network with NVLink Switch System where indicated.

Up to 30X Higher AI Inference Performance on the Largest Models

Megatron chatbot inference (530 billion parameters)

Projected performance subject to change. Inference on a Megatron 530B-parameter chatbot model with input sequence length=128 and output sequence length=20 | A100 cluster: HDR IB network | H100 cluster: NVLink Switch System, NDR IB
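Chatbot inference generates the 20 output tokens one at a time, and each decode step has to read the model weights from GPU memory. That gives a rough lower bound on per-token latency: weight size divided by aggregate memory bandwidth. The sketch below is a hypothetical back-of-envelope estimate, not NVIDIA's benchmark methodology. It assumes FP8 weights (1 byte per parameter), weights split evenly across 8 H100 SXM GPUs, and perfect bandwidth utilization. It ignores the 128-token prefill, KV-cache reads, and interconnect traffic.

```python
# Hypothetical estimate: memory-bandwidth lower bound on decode latency for a
# 530B-parameter model. The GPU count and precision are assumptions.

PARAMS = 530e9             # Megatron 530B parameter count (benchmark note)
BYTES_PER_PARAM = 1        # assumption: FP8 weights
NUM_GPUS = 8               # assumption: weights split evenly over 8 GPUs
HBM_BYTES_PER_S = 3.35e12  # H100 SXM memory bandwidth (spec table, 3.35TB/s)
OUTPUT_TOKENS = 20         # output sequence length (benchmark note)


def min_seconds_per_token(params, bytes_per_param, num_gpus, bandwidth):
    """Lower bound: every decode step streams all weights from HBM once.

    Ignores prefill, KV-cache reads, activations, and interconnect traffic.
    """
    weight_bytes = params * bytes_per_param
    return weight_bytes / (num_gpus * bandwidth)


weights_per_gpu_gb = PARAMS * BYTES_PER_PARAM / NUM_GPUS / 1e9
per_token_s = min_seconds_per_token(PARAMS, BYTES_PER_PARAM, NUM_GPUS, HBM_BYTES_PER_S)

print(f"weights per GPU: {weights_per_gpu_gb:.1f} GB")
print(f"per-token lower bound: {per_token_s * 1e3:.1f} ms")
print(f"{OUTPUT_TOKENS}-token lower bound: {per_token_s * OUTPUT_TOKENS:.2f} s")
```

Under these assumptions, each GPU holds about 66 GB of weights, which fits within the 80GB of an H100 SXM, and the bound works out to roughly 20 ms per token. Real latency is higher. The point is that decode speed depends on memory bandwidth as well as peak FLOPS.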

Exascale High-Performance Computing

Projected performance subject to change. 3D FFT (4K³) throughput | A100 cluster: HDR IB network | H100 cluster: NVLink Switch System, NDR IB | Genome Sequencing (Smith-Waterman) | 1 A100 | 1 H100
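To show the scale of the 3D FFT benchmark, the sketch below estimates the memory footprint and approximate flop count of a 4096³ transform. Two inputs are assumptions because the benchmark note does not state them. The sketch assumes single-precision complex data (8 bytes per point), and it uses the textbook 5·N·log₂N flop estimate for a complex FFT.

```python
import math

GRID_SIDE = 4096            # 4K^3 grid from the benchmark note
BYTES_PER_POINT = 8         # assumption: complex64 (two float32 values)
GPU_MEMORY_BYTES = 80e9     # H100 SXM memory from the spec table (80GB)

points = GRID_SIDE ** 3                        # total grid points, 2**36
footprint_bytes = points * BYTES_PER_POINT     # one copy of the grid, no work buffers
approx_flops = 5 * points * math.log2(points)  # textbook radix-2 estimate
min_gpus_to_hold = math.ceil(footprint_bytes / GPU_MEMORY_BYTES)

print(f"grid points: {points:,}")
print(f"single-copy footprint: {footprint_bytes / 2**30:.0f} GiB")
print(f"approximate work: {approx_flops:.2e} flops")
print(f"GPUs needed just to hold one copy: {min_gpus_to_hold}")
```

A single copy of the grid (512 GiB under this assumption) already exceeds one GPU's memory, so the transform must be distributed across GPUs. A distributed 3D FFT relies on all-to-all data exchanges, which is one plausible reason the cluster interconnect appears in the benchmark configuration.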

| Specification | H100 SXM | H100 NVL |
|---|---|---|
| FP64 | 34 teraFLOPS | 30 teraFLOPS |
| FP64 Tensor Core | 67 teraFLOPS | 60 teraFLOPS |
| FP32 | 67 teraFLOPS | 60 teraFLOPS |
| TF32 Tensor Core* | 989 teraFLOPS | 835 teraFLOPS |
| BFLOAT16 Tensor Core* | 1,979 teraFLOPS | 1,671 teraFLOPS |
| FP16 Tensor Core* | 1,979 teraFLOPS | 1,671 teraFLOPS |
| FP8 Tensor Core* | 3,958 teraFLOPS | 3,341 teraFLOPS |
| INT8 Tensor Core* | 3,958 TOPS | 3,341 TOPS |
| GPU Memory | 80GB | 94GB |
| GPU Memory Bandwidth | 3.35TB/s | 3.9TB/s |
| Decoders | 7 NVDEC, 7 JPEG | 7 NVDEC, 7 JPEG |
| Max Thermal Design Power (TDP) | Up to 700W (configurable) | 350–400W (configurable) |
| Multi-Instance GPUs | Up to 7 MIGs @ 10GB each | Up to 7 MIGs @ 12GB each |
| Form Factor | SXM | PCIe dual-slot air-cooled |
| Interconnect | NVIDIA NVLink™: 900GB/s; PCIe Gen5: 128GB/s | NVIDIA NVLink: 600GB/s; PCIe Gen5: 128GB/s |
| Server Options | NVIDIA HGX H100 Partner and NVIDIA-Certified Systems™ with 4 or 8 GPUs; NVIDIA DGX H100 with 8 GPUs | Partner and NVIDIA-Certified Systems with 1–8 GPUs |
| NVIDIA AI Enterprise | Add-on | Included |

\* With sparsity.
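The table lists peak math rates and memory bandwidth side by side, which is enough for a rough roofline comparison. Dividing peak FLOPS by bandwidth gives the arithmetic intensity (FLOP per byte) a kernel needs before it stops being memory-bound. This sketch assumes the asterisked Tensor Core figures are exactly twice the dense rate, following the 2:4 structured-sparsity convention. It also uses peak numbers, which real kernels do not reach.

```python
# Figures copied from the spec table above.
SPECS = {
    # name: (FP16 Tensor Core TFLOPS with sparsity, FP64 TFLOPS, bandwidth TB/s)
    "H100 SXM": (1979, 34, 3.35),
    "H100 NVL": (1671, 30, 3.9),
}


def dense_from_sparse(sparse_tflops):
    """Assumption: 2:4 structured sparsity doubles the quoted peak, so dense is half."""
    return sparse_tflops / 2


def ridge_point(peak_tflops, bandwidth_tbs):
    """Roofline ridge point in FLOP/byte; the 1e12 factors in TFLOPS and TB/s cancel."""
    return peak_tflops / bandwidth_tbs


results = {}
for name, (fp16_sparse, fp64, bw) in SPECS.items():
    fp16_dense = dense_from_sparse(fp16_sparse)
    results[name] = {
        "fp16_dense_tflops": fp16_dense,
        "fp16_ridge_flop_per_byte": ridge_point(fp16_dense, bw),
        "fp64_ridge_flop_per_byte": ridge_point(fp64, bw),
    }
    r = results[name]
    print(f"{name}: dense FP16 ~{fp16_dense:.1f} TFLOPS, "
          f"FP16 ridge ~{r['fp16_ridge_flop_per_byte']:.0f} FLOP/B, "
          f"FP64 ridge ~{r['fp64_ridge_flop_per_byte']:.1f} FLOP/B")
```

By this estimate, dense FP16 Tensor Core work on H100 SXM needs roughly 295 FLOP per byte of memory traffic to become compute-bound, compared with about 10 for FP64. The NVL's higher bandwidth lowers its FP16 ridge point to about 214.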